Retrospectives should be a core practice for any team, but you need a way to measure their success and the team's engagement; otherwise they can become just another meeting.

A retrospective should produce a quantitatively measurable experiment or action item, while team engagement metrics provide a qualitative measurement.

In this article I'll explain why both are critical to retrospective success. If you have sat in a retrospective and wondered "what's the point of this meeting?", you should come away with a better understanding of its purpose, and facilitators and scrum masters will learn when and how to measure retrospective engagement and success.

Preamble

Your retrospective must be a safe environment or people won't speak their minds. I gave a 20-minute talk at the Sydney Pivotal office on how to measure retrospective success, where I shared ideas for checking your retrospective's safety and improving it. If you're interested in retrospectives, I also gave a talk on switching retrospective formats, loosely based on my blog post.

Why do we run retrospectives?

The right mindset for a retrospective requires the team to understand and agree that today we know more than we did last week. I think Kerth's Prime Directive captures that:

Regardless of what we discover, we understand and truly believe that everyone did the best job they could, given what they knew at the time, their skills and abilities, the resources available, and the situation at hand.

I think the agile manifesto captures the spirit of why we run retrospectives:

At regular intervals, the team reflects on how to become more effective, then tunes and adjusts its behavior accordingly.

You want to run retrospectives at regular intervals because running them "when we need it" is like seeking urgent dental care after you pop a decade-old filling (and half your tooth) instead of preventing that with regular checkups. Regular intervals also let you measure how the team feels about retrospectives over time; more on this later.

You want the team to reflect on how to become more effective because acting on individual issues might fix the symptoms instead of the root cause. Reflect on hard data and feelings, then look for patterns and generate insights. Take time to reflect, and don't rush to find a solution for each item that comes up as if you were playing whack-a-mole.

You want the team to commit to tuning and adjusting its behavior through an agreed, quantitatively measurable experiment.

Measure your retrospective success

I like to use a S.M.A.R.T. experiment. SMART is a simple acronym that reminds us to come up with an experiment that is:

Specific:

"We should pair program more" isn't very specific. Instead, use "Pair program 4 hours every day."

Measurable:

You can measure how many days you actually pair program for 4 hours. You should also measure hard data such as velocity, delivered stories, and bugs.

This measurement should be quantitative and related to how effective your team is.

Achievable:

Use an experiment that generates consensus within the team. It wouldn't be a good idea to propose "Pair program 8 hours every day" when you know people are skeptical about it. Find a more suitable experiment, maybe "Pair program 2 days per week."

Use an experiment that makes the team step out of their comfort zone without attempting to fix things clearly out of reach.

Relevant:

Why do you want to pair program? Because someone told you to? That isn't very relevant or meaningful.

Perhaps someone called out that Jack was sick for 4 days and nobody else could work on the iOS React Native UI components, and that designers weren't available to clarify how the user interface should work. In that case you might want to break down the knowledge silos that block the team's progress. That's one great use of pair programming!

Timebound:

Don't use an open-ended experiment. Make sure it has an end, for example: "We will try pair programming for 2 weeks and then decide whether to continue or adjust the experiment." A timebound experiment creates common ground between optimists and skeptics and makes it easier to try new things.

Here’s our measurable SMART experiment:

We want to break down the knowledge silos that often block our team's progress. To do that, developers will pair program 4 hours a day for the next 2 weeks. Designers will pair with developers 1 hour a day to clarify and learn the app's internal behavior. We will keep track of how often we do this on the pair programming sheet in our shared workspace. In 2 weeks we will review this experiment and decide whether to continue or adjust it.
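To make the "keep track" part of the experiment concrete, here is a minimal Python sketch of how a team might check its pairing log against the two targets above. The `PairingEntry` record, the helper names and the sample log are all hypothetical; a shared spreadsheet works just as well, and the sketch simply assumes you can export one row per working day.

```python
from dataclasses import dataclass
from datetime import date

# Targets from the example experiment above.
DEV_TARGET_HOURS = 4.0       # developers pair 4 hours a day
DESIGNER_TARGET_HOURS = 1.0  # designers pair 1 hour a day with developers


@dataclass
class PairingEntry:
    """Hypothetical row from the shared pair programming sheet (one per working day)."""
    day: date
    developer_hours: float
    designer_hours: float


def days_on_target(entries: list[PairingEntry]) -> tuple[int, int]:
    """Return (developer days on target, designer days on target)."""
    dev_days = sum(1 for e in entries if e.developer_hours >= DEV_TARGET_HOURS)
    designer_days = sum(1 for e in entries if e.designer_hours >= DESIGNER_TARGET_HOURS)
    return dev_days, designer_days


def adherence(entries: list[PairingEntry]) -> tuple[float, float]:
    """Fraction of logged days on which each target was met (0.0 to 1.0)."""
    if not entries:
        return 0.0, 0.0
    dev_days, designer_days = days_on_target(entries)
    return dev_days / len(entries), designer_days / len(entries)


# Made-up log for four days of the two-week experiment.
log = [
    PairingEntry(date(2024, 1, 8), developer_hours=4.0, designer_hours=1.0),
    PairingEntry(date(2024, 1, 9), developer_hours=3.0, designer_hours=1.5),
    PairingEntry(date(2024, 1, 10), developer_hours=4.5, designer_hours=0.0),
    PairingEntry(date(2024, 1, 11), developer_hours=4.0, designer_hours=1.0),
]
print(days_on_target(log))  # (3, 3)
print(adherence(log))       # (0.75, 0.75)
```

At the review in two weeks, numbers like these give the team something concrete to discuss alongside velocity, delivered stories and bugs, rather than a vague feeling that "we paired a bit more."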

I started by explaining how to measure retrospective success so I could cover the quantitative measurement first, but the experiment is actually formulated in the middle of the retrospective. When you start your retrospective, you should first take a qualitative measurement of team engagement.

Measure team engagement (ESVP)

At the start of the retrospective, spend 5 to 10 minutes measuring team engagement. Depending on how safe the environment is, you can make it anonymous or not.

I like to do this with an activity called ESVP, from Derby and Larsen's book Agile Retrospectives: Making Good Teams Great. Ask the team which role (Explorer, Shopper, Vacationer or Prisoner) best represents how they feel about today's retrospective:

Explorers

They want to make the most of today's retrospective, learn everything they can about the iteration or project, and leave no stone unturned.

Shoppers

They’re happy to be part of the retrospective and want to actively look for something to put in their cart.

Vacationers

They're not interested in the retrospective, but they'd rather be in it than doing actual work at their desk.

Prisoners

They've been forced to sit in this meeting, don't see the point of it, and won't actively participate.

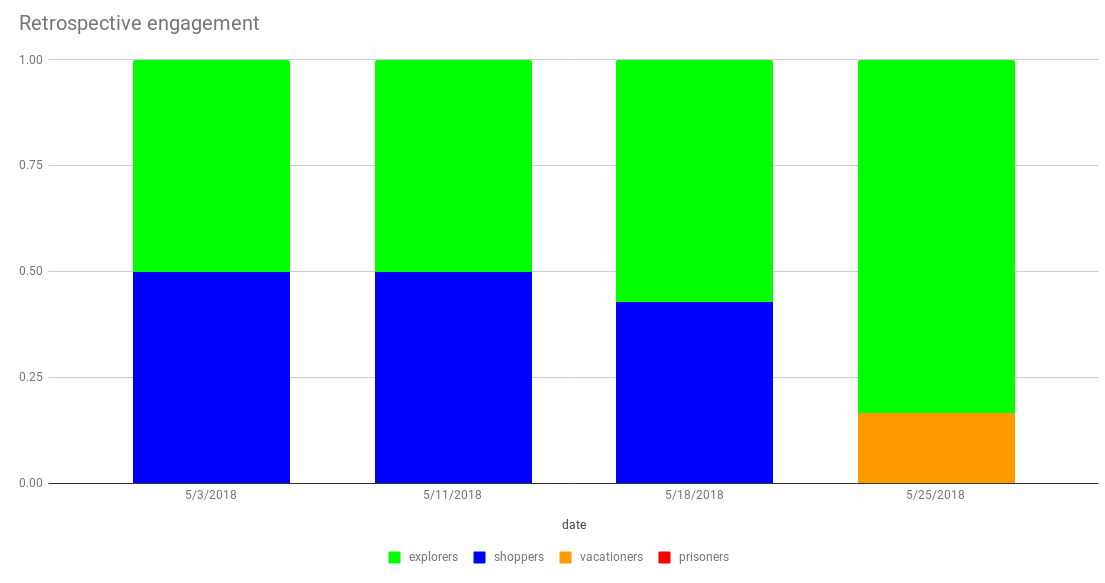

Collecting these at regular intervals will allow you to learn when the team is shifting from engaged to disengaged and adjust your retrospective accordingly.

It will also allow you to celebrate when the team shifts the other way! Learn what you're doing well and try to do more of it, or share it with other teams in your organization.

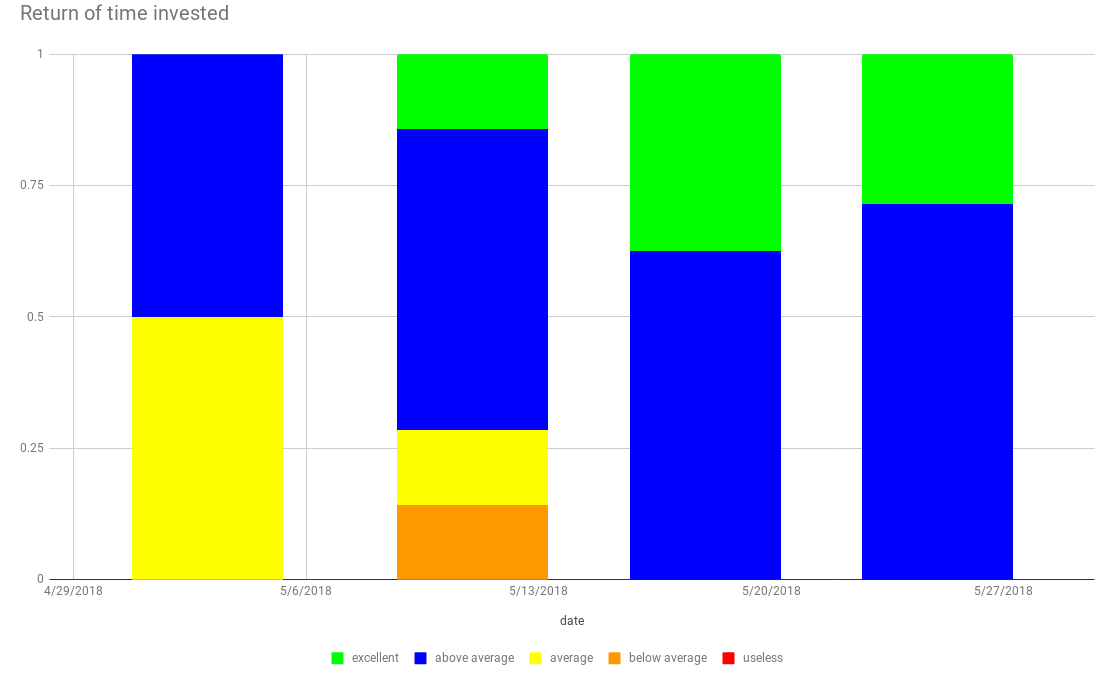
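If you record ESVP votes after each retrospective, a few lines of code are enough to spot the shifts described above. The Python sketch below is one way to do it, under an assumption of my own rather than a rule of the ESVP activity: it counts Explorers and Shoppers as "engaged" and Vacationers and Prisoners as not. The function names and sample votes are hypothetical.

```python
from collections import Counter

ROLES = ("Explorer", "Shopper", "Vacationer", "Prisoner")
# Assumption (not part of the ESVP activity itself): these two roles count as "engaged".
ENGAGED_ROLES = ("Explorer", "Shopper")


def tally(votes):
    """Count votes per ESVP role, reporting 0 for roles nobody picked."""
    counts = Counter(votes)
    unknown = set(counts) - set(ROLES)
    if unknown:
        raise ValueError(f"unknown ESVP role(s): {sorted(unknown)}")
    return {role: counts[role] for role in ROLES}


def engagement_ratio(votes):
    """Share of votes that are Explorer or Shopper, from 0.0 to 1.0."""
    counts = tally(votes)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(counts[role] for role in ENGAGED_ROLES) / total


def engagement_trend(history):
    """For {retro_label: votes} in chronological order, return
    (label, engagement ratio, change since the previous retro) tuples."""
    rows = []
    previous = None
    for label, votes in history.items():
        ratio = engagement_ratio(votes)
        change = None if previous is None else ratio - previous
        rows.append((label, ratio, change))
        previous = ratio
    return rows


# Made-up votes from two consecutive retrospectives.
history = {
    "Retro 1": ["Explorer", "Prisoner", "Vacationer", "Shopper"],
    "Retro 2": ["Explorer", "Shopper", "Shopper", "Vacationer"],
}
for label, ratio, change in engagement_trend(history):
    print(label, f"{ratio:.0%}", change)
```

A single ratio hides detail, so it is worth keeping the full `tally` output too: a retro where a Prisoner became a Vacationer won't move this ratio, but it is still a shift worth noticing.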

Measure the return on time invested (ROTI)

Before you finish the retrospective, spend 5 to 10 minutes measuring how well the team spent the allocated time. I usually ask everyone to rate the return on time invested for this meeting on a scale from 0 to 4, where:

4: Excellent

This meeting was invaluable; if you had skipped it, you would have missed some key piece of information. You're very glad you attended.

3: Above average

This meeting was a good use of your time; you learned new things.

2: Average

This meeting gave you enough to justify your presence for the time spent on it.

1: Below average

You gained some value, but it was mostly a waste of time. This meeting was below average and wasn't worth all the time you spent on it.

0: Useless

This meeting was a waste of time. You gained nothing and wish you had skipped it.

After everyone chooses a number, ask each person for one suggestion or idea that would raise their score by one point. The retrospective is coming to an end, so tell the team you won't get into long discussions about these ideas; instead, you'll collect them and review them to adjust the next retro. Timebox each person to 60 seconds.
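Averaging the ROTI votes gives you a single number to compare between retros, while the distribution shows whether a middling average hides a couple of unhappy people. Here's a minimal Python sketch; `roti_summary` and the sample scores are hypothetical, and the labels simply mirror the 0 to 4 scale above.

```python
# Labels mirror the 0 to 4 ROTI scale described above.
ROTI_LABELS = {
    4: "Excellent",
    3: "Above average",
    2: "Average",
    1: "Below average",
    0: "Useless",
}


def roti_summary(scores):
    """Return (average ROTI rounded to 2 decimals, number of votes per label)."""
    if not scores:
        raise ValueError("no ROTI scores collected")
    for score in scores:
        if score not in ROTI_LABELS:
            raise ValueError(f"ROTI score must be one of 0-4, got {score!r}")
    average = round(sum(scores) / len(scores), 2)
    distribution = {label: scores.count(value) for value, label in ROTI_LABELS.items()}
    return average, distribution


# Made-up votes from a five-person team.
average, distribution = roti_summary([4, 3, 3, 2, 1])
print(average)  # 2.6
print(distribution)
```

Keeping the summary next to the improvement ideas you collected lets you check at the next retro whether acting on them actually moved the score.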

Conclusion

Making your team more effective doesn't require tackling huge issues every time. What is critical is to measure and review the experiment's progress at regular intervals so the team can trust retrospectives as a meaningful tool for adjusting their process.

Keep a constant eye on how the team feels about retros. Failing to do so might leave you with a room full of disengaged prisoners who believe they're wasting their time, which makes your experiment unachievable.

Are you going to try some of the suggested strategies? Do you already measure your retrospectives? What challenges do you face?